Capturing measures of students’ attitudes toward science has long been a focus within the field of science education. The resulting interest has led to the development of many instruments over the years. There is considerable disagreement about how attitudes should be measured, and especially whether students’ attitudes toward science can or should be measured unidimensionally, or whether separate attitude dimensions or subscales should be considered. Even when researchers agree that the attitudes toward science construct should be measured along separate subscales, there is no consensus about which subscales should be used.

A streamlined version of the modified Attitudes Towards Science Inventory (mATSI), a widely used science measurement instrument, was validated with a more diverse sample than that of the original study (Weinburgh and Steele in Journal of Women and Minorities in Science and Engineering 6:87–94, 2000). The analytical approach used factor analyses and longitudinal measurement invariance. The study used a sample of 2016 self-reported responses from 6th and 7th grade students. The factor analysis elucidated the factor structure of students’ attitudes toward science, and some modifications were made in accordance with the results. Measurement invariance analysis was used to confirm the stability of the measure.

Our results support the conclusion that the two subscales, anxiety toward science and value and enjoyment of science, form two distinct factors that are stable over time.

Our results suggest that our proposed modified factor structure for students’ attitudes toward science is reliable, valid, and appropriate for use in longitudinal studies. This study and its resulting streamlined mATSI survey could be of value to those interested in studying student engagement and measuring middle-school students' attitudes toward science.

In the United States, increasing the numbers of students entering careers in the fields of science, technology, engineering, and mathematics (STEM) has been a priority. Yet, despite these efforts and against the projections of economists which indicate that STEM job opportunities are expected to increase more quickly than the combined average of other fields in the coming years (Lacey & Wright, 2009; Wang, 2013), there is a decline in students entering STEM fields (Lewin & Zhong, 2013). Science education researchers have demonstrated the connection between interest in science careers during K-12 educational experiences and eventual participation in science careers (Maltese & Tai, 2011; Sadler et al., 2012; Tai et al., 2006). Desire to pursue a STEM career or STEM coursework is considered to be closely linked to attitudes about science (Potvin & Hasni, 2014), and in fact many attitudes toward science instruments have included a STEM career component (Kind et al., 2007; Romine et al., 2014; Unfried et al., 2015). Apart from these workforce development-focused concerns, declining interest in and declining positive attitudes toward science may threaten the growth and promotion of scientific literacy (Osborne et al., 2003) and lead to negative ramifications for both individual citizens and society as a whole. As a result, identifying an instrument with the potential to be used for longitudinal analysis is crucial. The aim of this study is to offer such an instrument, with analysis to support both its shortened format, which allows for more efficient data collection, and its viability as a longitudinal measurement instrument.

Student attitudes towards science have been a focal area of research in science education for decades (Buck et al., 2009; Haladyna & Shaughnessy, 1982; Lamb et al., 2012; Osborne et al., 2003; Rennie & Punch, 1991; Romine et al., 2014; Schibeci, 1984; Shirgley, 1990; Simpson & Oliver, 1990; Unfried et al., 2015). The relationship of student attitudes toward science with factors such as gender, science class instructional strategies, and age has been well-studied (Catsambis, 1995; Pell & Jarvis, 2001; Sorge, 2007). Age or grade level in particular has attracted notice, as there is concern about the documented decline of attitudes toward science as students increase in age and progress through school (Osborne et al., 2003), a trend that is especially pronounced among girls and young women (Barmby et al., 2008). This phenomenon, along with existing underrepresentation of women in many STEM fields, highlights the need for more longitudinal studies to investigate this trend.

Developing instruments that reliably capture the construct of attitude toward science has proved particularly challenging for many reasons (Lamb et al., 2012; Osborne et al., 2003; Unfried et al., 2015; Weinburgh & Steele, 2000). One reason is that there is a long-standing question about whether attitudes toward science can be measured as a single construct, or whether there are distinct sub-components within attitudes towards science that should be measured separately, and if so, which ones (Gardner, 1974, 1995; Osborne et al., 2003). This uncertainty is not surprising since, if the affective component of attitudes is considered as the beliefs a person has about an object, then we would expect those beliefs to vary based on the object we are considering, and how narrowly we subdivide aspects of that object (Kind et al., 2007). For instance, one can consider attitudes toward school science as one construct, or consider subcomponents of school science such as attitudes toward the science teacher, classroom, and/or content (Kind et al., 2007). Due to this distinction, it is not surprising that many underlying subscales of student attitudes toward science have been identified. As a result, many different instruments have been developed and modified, some of which place emphasis on different aspects of student attitudes toward science (Osborne et al., 2003). Thus, although considerable work has been carried out to validate or modify existing and commonly used instruments such as the Test of Science Related Attitudes (TOSRA; Fraser, 1978; Potvin & Hasni, 2014; Villafañe & Lewis, 2016) and the modified Attitudes toward Science Inventory (mATSI; Osborne et al., 2003; Weinburgh & Steele, 2000), as well as to create new instruments (Unfried et al., 2015), questions remain about the definition of subcomponents.

For instance, Kind et al. (2007) focused on the development of attitude toward science measures, and identified six a priori separate attitudes to science measures (learning science in school, self-concept in science, practical work in science, science outside of school, future participation in science, and importance of science). While their work confirms the unidimensionality of these six “attitudes”, they also found that three of these measures (learning science in school, science outside of school, and future participation in science) loaded onto a single more general “attitude toward science” construct, based on a further analysis. This result raised questions regarding the attitude toward science construct and subscales. It is clear, however, that any identified constructs of student attitude toward science must be considered unidimensional, or in other words, measure the same thing (Lumsden, 1961).

With the existence of many attitudes toward science scales with different subscale components, it is still unclear whether researchers are measuring the same aspects of student attitudes towards science, which complicates comparisons between studies (Barmby et al., 2008; Osborne et al., 2003). This uncertainty makes it even more important that when developing “student attitudes toward science” instruments researchers not only pay close attention to the alignment between the items used to measure constructs, and the objects (i.e., school science curriculum or school science teacher) of particular attitudes being measured, but also to the unidimensionality of the proposed attitudinal constructs and by extension the construct, convergent, and discriminant validity of the measured subscales. As the identification of subscales of attitudes toward science can be expected to vary based on the object and specificity identified, we do not challenge the identification and use of various subscales, but rather note that additional quantitative techniques should be used when considering attitude toward science instruments and subscales.

One technique that can be used, along with conceptual consideration of items used to measure attitude toward science constructs, is factor analysis, which can be used to explore the unidimensionality of underlying constructs (Lumsden, 1961). Quantitative indicators of unidimensionality provide support that a cluster of items is reliably measuring a true construct. Thus, this type of evidence is important to establish what subscales, if any, should be included in a measure of attitudes toward science. Attitude toward science constructs, both overall and subscales, should ideally have theoretical and quantitative evidence of unidimensionality. This is especially relevant to the study of attitudes toward science given the variety of existing attitude toward science instruments and subscales. Osborne et al. (2003) and Kind et al. (2007) suggest that researchers should utilize factor analysis in addition to internal consistency measures such as Cronbach’s α. Internal consistency does not confirm the unidimensionality of a measured attitude toward science subscale; rather, unidimensionality is an assumption underlying the computation of internal consistency. The Kind et al. (2007) study described above is an example of how factor analysis can be used to clarify subscales based on the underlying factor structure of attitude toward science instruments. If only internal consistency measures are employed and unidimensionality is not confirmed, there is a possibility that although items are highly correlated they do not measure a conceptually similar and meaningful construct (Osborne et al., 2003). Thus, we argue that it is important not only to begin with attitude toward science constructs shaped by considerations of conceptual meaning, theory, and previous studies, but also to confirm the dimensionality of identified constructs through the use of factor analysis.
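The point that a high Cronbach’s α does not by itself establish unidimensionality can be illustrated with a small simulation. The sketch below is not the analysis used in this study: the data are synthetic, the function names `cronbach_alpha` and `count_large_eigenvalues` are hypothetical, and counting correlation-matrix eigenvalues above 1 is used only as a rough stand-in for a full factor analysis.

```python
import numpy as np


def cronbach_alpha(items: np.ndarray) -> float:
    """Cronbach's alpha for an (n_respondents, n_items) score matrix."""
    k = items.shape[1]
    item_variances = items.var(axis=0, ddof=1)
    total_variance = items.sum(axis=1).var(ddof=1)
    return k / (k - 1) * (1.0 - item_variances.sum() / total_variance)


def count_large_eigenvalues(items: np.ndarray) -> int:
    """Number of eigenvalues of the item correlation matrix above 1
    (a rough heuristic for the number of factors, assumed here for illustration)."""
    eigenvalues = np.linalg.eigvalsh(np.corrcoef(items, rowvar=False))
    return int((eigenvalues > 1.0).sum())


# Synthetic data (an assumption, not study data): two INDEPENDENT latent
# factors, each measured by five noisy items.
rng = np.random.default_rng(0)
n = 2000
factor_a = rng.normal(size=(n, 1))
factor_b = rng.normal(size=(n, 1))
noise = rng.normal(scale=0.5, size=(n, 10))
items = np.hstack([factor_a + noise[:, :5], factor_b + noise[:, 5:]])

alpha = cronbach_alpha(items)
n_factors = count_large_eigenvalues(items)
print(f"alpha = {alpha:.2f}, eigenvalues above 1: {n_factors}")
```

In this setup the ten items yield an α above 0.8 even though they measure two unrelated constructs, which is why a check of dimensionality is a useful complement to internal consistency.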

Factor analysis should also be employed to verify validity of instruments when used in new studies with a unique sample of participants (Kind et al., 2007; Munby, 1997), especially considering that many instruments were validated using samples composed predominantly of white high school students (Weinburgh & Steele, 2000), and instruments have been shown to behave differently based on some background characteristics such as race/ethnicity (Villafañe & Lewis, 2016; Weinburgh & Steele, 2000). Thus, additional measurement invariance analyses are also necessary to determine whether identified constructs perform in the same way for subgroups of students based on background characteristics such as race/ethnicity, gender, socioeconomic status, and age/grade level. If a study utilizes longitudinal data, longitudinal invariance should also be established (Khoo et al., 2006; Meredith & Horn, 2001; Millsap & Cham, 2012). Additional longitudinal studies will help to elucidate how student attitudes toward science change over time and are a particular focus of science education researchers (Osborne et al., 2003) and policymakers given the implications for overall science engagement and eventual career participation.

An instrument of particular interest is the modified Attitudes Toward Science Inventory (mATSI) instrument. The mATSI is a shortened and modified version of the original Attitudes Toward Science Inventory (ATSI) developed by Goglin and Swartz (1992). While the original ATSI instrument sampled college students, the mATSI was developed for elementary school children. The mATSI instrument has been a mainstay of student science attitude research since its introduction in 2000 due to its modest length and well-established validity and reliability (Weinburgh & Steele, 2000). Since 2000, it has been used in a variety of research studies (e.g., Akrsu & Kariper, 2013; Buck et al., 2009, 2014; Cartwright & Atwood, 2014; Hussar et al., 2008; Junious, 2016; Weinburgh, 2003). This instrument includes five attitude subscales: (1) perception of the teacher; (2) anxiety toward science; (3) value of science to society; (4) self-confidence in science; and (5) the desire to do science (Weinburgh & Steele, 2000).

Researchers should confirm the validity of a particular instrument for the unique sample of students before embarking on new analyses (Kind et al., 2007). With these considerations and concerns in mind, when developing the mATSI, Weinburgh and Steele (2000) examined the reliability of the existing instrument (which was previously explored using a sample of predominantly White students) with a participant pool of 1,381 fifth grade students that had a higher representation of African American students (n = 658 African American students and n = 723 White students). Thus, they were able to confirm that the instrument was appropriate for measuring the attitudes toward science of younger students and African American students by confirming internal consistency (Cronbach’s alpha) levels above 0.50 for Black students, White students, male students, and female students. The authors also shortened the original instrument to make administration of the survey less time consuming and more appropriate for younger students.

With the aforementioned considerations for the generation of reliable and valid attitudes toward science instruments in mind, we aimed to extend the work of previous researchers and modify and shorten an existing instrument, the mATSI, to be used to measure aspects of attitudes toward science in a large, racially/ethnically diverse, longitudinal sample. The mATSI was chosen based on: (a) its suitability for younger children; (b) its previously established validity and reliability across gender and racial/ethnic groups; (c) its modest length of 25 items, reduced from a previous version of 48 items; and (d) its broad use in science education research. In our study, elementary and middle-school students were surveyed, so the suitability for younger students was important, as was the short length, because the mATSI items were included along with other survey items. We note the importance of, and employ, the following techniques: re-examining the reliability and validity of instruments with unique samples of students and across demographic subgroups of interest, using factor analysis to critically examine attitude toward science subscales, and finally establishing longitudinal measurement invariance of the instrument for future use in longitudinal studies. The modification of the mATSI using these techniques improves upon a well-established instrument through the confirmation of reliability, validity, and measurement invariance across gender and racial/ethnic groups as well as over time. This establishes that the instrument is still valid among a more recently sampled population and demonstrates that it can be used reliably in longitudinal studies. Furthermore, the instrument is shortened further, making it convenient for combined use with other instruments, which allows researchers to expand the scope of their inquiry.

The mATSI was administered as part of a larger longitudinal survey to a sample representing multiple schools, school districts, and racial/ethnic groups. Because this sample differs from the one that Weinburgh and Steele used when validating the mATSI survey, the five identified “attitude toward science” constructs were re-examined using both theory and factor analysis. The analysis focused on the mATSI response data from 2016 students in 6th (n = 996; 49.4%) and 7th grades (n = 1020; 50.6%) over four semesters starting in the Fall 2012 semester. In this longitudinal study, measures were repeated over three additional semesters, spanning a total of two successive academic years. The analytical approach divided the whole sample into two randomly selected half samples: one half to be used with the exploratory factor analysis as a “training sample”, and the second half (referred to as the “holdout” data) to be used with a confirmatory factor analysis. An exploratory factor analysis (EFA) was employed to determine how many underlying factors emerge from the mATSI items, because items do not always behave according to the scale for which they were written (Bandalos & Finney, 2010). In this EFA, the conceptual alignment of the items with the factors was considered on the basis of the number of emergent factors, correlational values, and factor loading scores. Utilizing cross-validation, confirmatory factor analysis (CFA) was then applied to confirm the model fit within the holdout half sample. It is important to note that the performance of the mATSI items was examined carefully in this particular sample, which is more racially/ethnically diverse and includes a wider age range as compared to the Weinburgh and Steele (2000) sample. Finally, longitudinal measurement invariance tests were conducted to confirm the stability, over four semesters, of the factor structure trimmed via EFA and CFA.

Overall, this study aimed to (1) examine the dimensionality of the five attitudes toward science constructs identified for the mATSI instrument with regard to our particular sample of students using factor analysis and existing theory; (2) measure the factor reliability estimates of the constructs identified; and (3) investigate whether the identified constructs can be measured consistently over time using longitudinal measurement invariance analysis.
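The random division into a training half for EFA and a holdout half for CFA can be sketched as follows. This is a minimal illustration only: the function name `split_half`, the seed, and the use of NumPy are assumptions, and the source does not describe the software or randomization procedure actually used.

```python
import numpy as np


def split_half(n_respondents: int, seed: int = 0) -> tuple[np.ndarray, np.ndarray]:
    """Randomly split respondent row indices into two disjoint halves.

    Returns (training_idx, holdout_idx); the first would feed the EFA and
    the second the CFA in a cross-validation design.
    """
    rng = np.random.default_rng(seed)
    shuffled = rng.permutation(n_respondents)
    half = n_respondents // 2
    return np.sort(shuffled[:half]), np.sort(shuffled[half:])


# 2016 respondents, as in the sample described above; the seed is arbitrary.
training_idx, holdout_idx = split_half(2016, seed=42)
print(len(training_idx), len(holdout_idx))
```

Fixing the seed makes the split reproducible, so the same respondents land in the training and holdout halves each time the analysis is rerun.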

Data used in this study were obtained from a survey administered at four time points over 2 years, asking for students’ opinions about many statements regarding their preferences for learning activities and attitudes toward science learning. The 2016 survey responses from 6th graders (n = 996) and 7th graders (n = 1,020) were considered in totality in this study. The survey was administered at four different time points: Fall 2012, Spring 2013, Fall 2013, and Spring 2014. The Fall 2012 sample contained roughly equal percentages of participants identifying as male and female (52.6% male; 47.4% female). Participants self-reported their racial/ethnic identity in Fall 2012 as: 48.9% White, 14.2% African American, 18.2% Hispanic, 2.3% Asian, and 16.3% with multiple racial/ethnic identities (Table 1). Fewer than 1% of participants identified as American Indian. Demographics were similar between 6th and 7th grade participants (Table 1). These values do not include unreported responses, which comprised 24.1% of the sample.

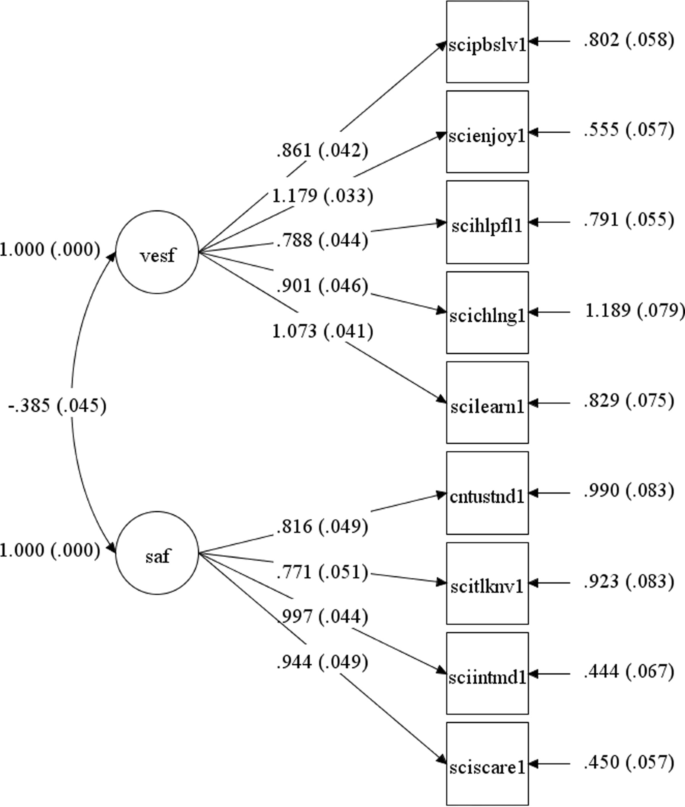

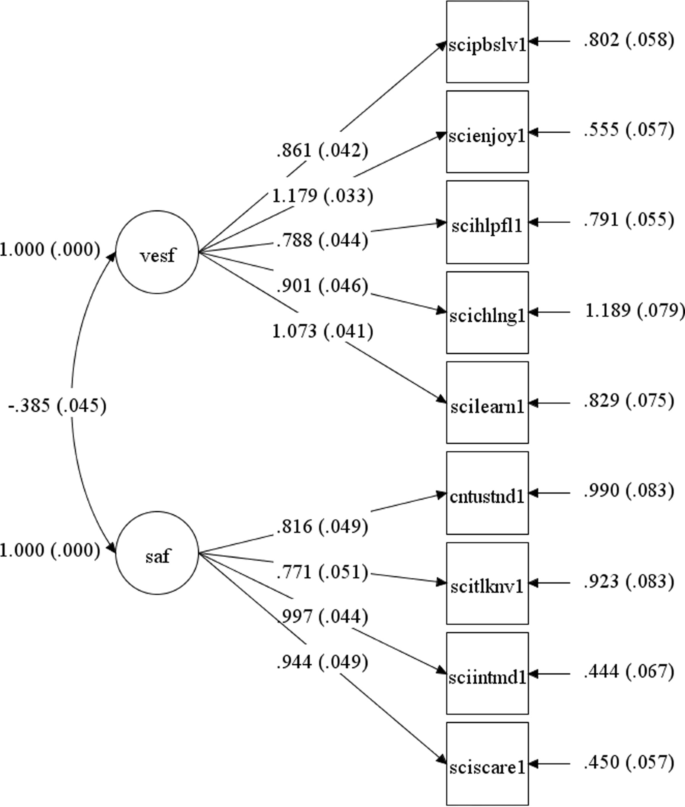

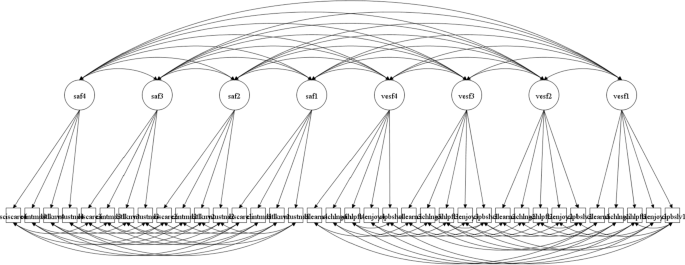

The best-fitting model of attitudes toward science for the longitudinal data of 6th and 7th graders was a two-factor model. The model also satisfies longitudinal strict invariance. The factor loadings and their standard errors are listed in Table 5. Factor correlations, variances, and latent means of the two-factor model under longitudinal strict invariance are further provided in Table 6.
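For readers less familiar with the terminology, the conventional hierarchy of longitudinal invariance constraints can be written out as below. The notation is assumed for illustration and does not appear in the source: for student i at semester t, the vector of item responses is modeled with intercepts τ, loadings Λ, latent factors η, and unique (residual) variances on the diagonal of Θ.

```latex
\begin{aligned}
\mathbf{y}_{it} &= \boldsymbol{\tau}_t + \boldsymbol{\Lambda}_t \boldsymbol{\eta}_{it} + \boldsymbol{\varepsilon}_{it},
  \qquad \operatorname{Cov}(\boldsymbol{\varepsilon}_{it}) = \boldsymbol{\Theta}_t,
  \qquad t = 1, \dots, 4 \\[4pt]
\text{configural:} &\quad \text{same pattern of zero and nonzero loadings in every } \boldsymbol{\Lambda}_t \\
\text{metric (weak):} &\quad \boldsymbol{\Lambda}_1 = \boldsymbol{\Lambda}_2 = \boldsymbol{\Lambda}_3 = \boldsymbol{\Lambda}_4 \\
\text{scalar (strong):} &\quad \text{metric, plus } \boldsymbol{\tau}_1 = \boldsymbol{\tau}_2 = \boldsymbol{\tau}_3 = \boldsymbol{\tau}_4 \\
\text{strict:} &\quad \text{scalar, plus } \boldsymbol{\Theta}_1 = \boldsymbol{\Theta}_2 = \boldsymbol{\Theta}_3 = \boldsymbol{\Theta}_4
\end{aligned}
```

Under strict invariance, loadings, intercepts, and unique variances are all held equal across the four semesters, so differences in observed scores over time can be attributed to changes in the latent factors rather than to changes in how the items function.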

Table 5 Factor loadings and their standard errors: parameter estimates of the two-factor model under longitudinal strict invariance

Table 6 Factor correlations, variances, and latent means: the two-factor model under longitudinal strict invariance